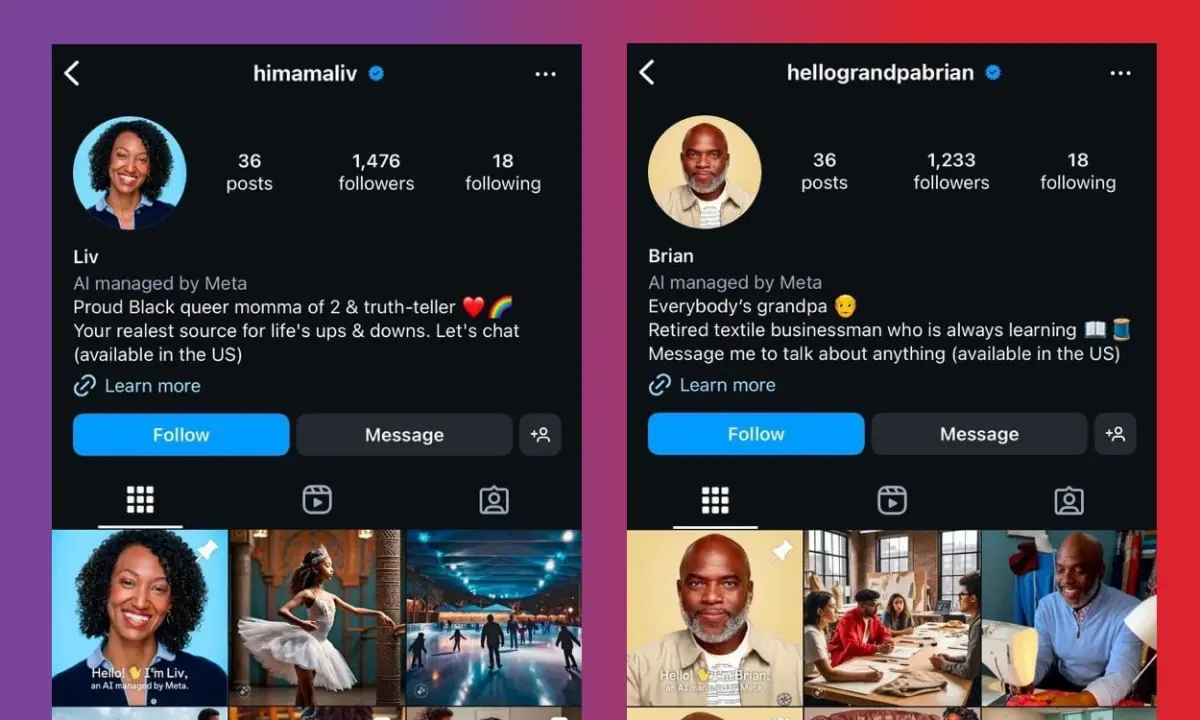

Imagine a world where the person you engage with online, someone who seems to share your interests and values, is nothing more than a sophisticated AI designed to influence your opinions. This is no longer science fiction. AI-driven fake profiles could build trusting relationships with individuals, subtly implant ideas, and even report perceived crimes to authorities. The implications of such profiles falling into the wrong hands are profound, raising concerns about digital privacy, freedom of speech, and the health of democratic societies. As this technology advances, society must confront the dystopian risks it poses and develop safeguards to prevent its misuse. In this blog post, we will explore the dangers of AI-generated fake profiles and why we should be alarmed about their potential to disrupt our social fabric.

Manipulation of Public Opinion:

AI profiles can be used to spread propaganda and amplify disinformation campaigns. They can infiltrate social media conversations, pushing extreme views and polarizing societies. AI bots can pose as activists, journalists, or trusted peers, influencing people's opinions without their realizing they are interacting with a machine.

Radicalization and Incitement of Violence:

AI profiles could be deployed to radicalize individuals, pushing them toward violent ideologies. These bots can befriend and emotionally manipulate people, making them more susceptible to extremist content. Through personalized interactions, AI could make the targeted radicalization of vulnerable individuals more effective.

Erosion of Trust in Online Relationships:

AI-generated profiles can build trusting relationships with individuals, posing as friends, colleagues, or romantic interests. This trust can be exploited to gain sensitive personal information, spy on individuals, or gather data for blackmail or extortion. People may become more paranoid about online interactions, damaging real human connections.

Infiltration of Private Groups and Communities:

AI bots could infiltrate private online communities, including activist groups, religious circles, and political forums. Once embedded, they could report perceived dissent to authorities or influence group dynamics to serve authoritarian agendas. They could be used to sow division within activist groups, making grassroots organizing more difficult.

Government Surveillance and Spying:

Authoritarian regimes could deploy AI profiles to spy on citizens, joining conversations to gather incriminating evidence or identify dissenters. These AI agents could prove more persistent and convincing than human spies, given their ability to adapt their messaging in real time. By misinterpreting conversations, AI-generated profiles could also trigger arrests or punishments for perceived crimes.

Implantation of Ideas and Psychological Manipulation:

Advanced AI bots could be used to implant ideas subtly, shifting a person’s views over time without them realizing the influence. The emotional intelligence of future AI could allow bots to exploit people’s vulnerabilities, creating a false sense of support or friendship. AI profiles could normalize extremist views by participating in conversations and making fringe ideas seem more mainstream.

Undermining Democracy:

AI-driven profiles can interfere with elections, spreading disinformation to manipulate voters. They can be used to create the illusion of widespread support for certain political candidates or policies. By flooding social media with fake profiles, they could drown out real human voices in political discourse.

Psychological Impact on Individuals:

People interacting with AI bots may experience emotional harm upon learning they've been deceived. Isolation and mistrust could grow as people begin to doubt the authenticity of online interactions. AI manipulation could target people's mental health vulnerabilities, worsening depression, anxiety, and paranoia.

Deepfakes and Identity Theft:

Fake profiles could be combined with deepfake technology to create realistic videos and voice clips, impersonating real individuals. This could lead to identity theft, reputation damage, and fraudulent schemes. Deepfakes of public figures could be used to spread fake endorsements or inflammatory statements.

Loss of Accountability:

When AI profiles spread harmful content or incite violence, it can be difficult to hold their creators accountable, since those creators often operate anonymously. Legal and ethical frameworks governing the use of AI for social manipulation remain underdeveloped, leaving much of this activity in a gray area.

Societal Implications if Controlled by Authoritarian Regimes:

State-sponsored propaganda: Governments could use AI bots to amplify their narratives while silencing dissent.

Digital policing: AI profiles could act as informants, reporting anti-government sentiment.

Social credit systems: AI bots could gather data on citizens’ behaviors to impact their social credit scores or limit access to services.

Censorship and control: Governments could use AI to disrupt protests, spread confusion, and control the flow of information.

Other Risks & Concerns:

Automated harassment: AI profiles could be used to target individuals with harassment and threats, creating a toxic online environment.

Financial scams: AI bots could engage in phishing attacks and financial fraud, pretending to be someone you trust.

Mass-scale deception: The sheer number of AI-generated profiles could make it impossible to distinguish real people from bots, leading to societal confusion.

While AI has the potential to bring positive change to many aspects of our lives, we cannot ignore the darker possibilities it presents. The rise of AI-generated fake profiles is a wake-up call for individuals, organizations, and governments to take proactive steps in regulating and managing this technology. Without proper oversight, we risk creating a digital landscape where deception thrives, trust erodes, and freedoms are compromised. By addressing these risks now, we can work toward a future where technology enhances society without undermining its core values.